The Ultimate Guide to Docker: Everything You Need to Know

Unlocking the Power of Docker: Exploring Its Advantages for Modern Development

Table of contents

- What is Docker?

- Benefits of using Docker: how it helps you integrate and manage modules and packages during development

- Understanding Docker

- Getting Started With Docker

- Dockerizing your application

- Happy containerization

- Best practices for Docker

What is Docker?

Docker is a platform that enables developers to build, package, and deploy applications as containers. Containers are lightweight, portable, and self-contained environments that run on top of a host operating system. Docker allows developers to create containers that include everything needed to run their applications, including the application code, dependencies, and system tools. These containers can then be easily moved between environments, making it easier to deploy and manage applications. Docker has gained popularity due to its ability to improve development efficiency, reduce deployment times, and provide consistent environments across different platforms.

Benefits of using Docker: how it helps you integrate and manage modules and packages during development

Portability: Docker containers can run on any machine that has Docker installed and a compatible operating system and CPU architecture, which makes it easy to move applications between environments such as development, testing, and production. Developers get a consistent development and deployment environment, which reduces the risk of environment-specific errors and improves application stability.

Faster Deployment: Docker containers can be deployed quickly because they already include all the dependencies needed to run the application. A container typically starts in seconds, whereas provisioning and configuring a server in traditional deployment models can take hours or days.

Resource Efficiency: Docker containers are lightweight and require fewer resources than traditional virtual machines. This allows developers to run multiple containers on the same machine, reducing infrastructure costs.

Consistent Environments: Docker ensures that the application runs in a consistent environment across different platforms, reducing the risk of errors and making it easier to troubleshoot issues.

Collaboration: Docker makes it easier for developers to collaborate by providing a shared environment for developing, testing, and deploying applications. This allows teams to work together more efficiently and reduces the risk of errors caused by differences in environments.

Understanding Docker

A. The basics of containerization

Containerization is a lightweight approach to virtualization that allows multiple isolated environments to run on a single host operating system. Containers provide an efficient and portable way to package and deploy applications, as they include everything needed to run the application, including the application code, dependencies, and system tools.

B. How Docker works

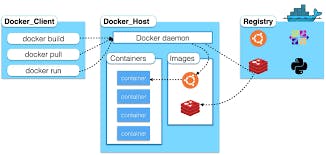

Docker uses a client-server architecture to manage containers. The Docker client sends requests to the Docker daemon (dockerd) through a REST API, usually over a local socket, and the daemon does the actual work of building, running, and managing Docker containers. You can use the client from the command line or through a graphical interface such as Docker Desktop, and it provides a simple and consistent interface for managing containers.
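Because the Docker client and daemon talk over an HTTP API, any program can do what the docker command does. The sketch below lists containers (the same information docker ps shows) by sending a GET request for the Engine API path /containers/json to the daemon's Unix socket, using only Python's standard library. It assumes a Linux host with the daemon on the default socket /var/run/docker.sock and a user allowed to access it; build_request and docker_api_get are hypothetical helper names.

```python
import json
import socket

# Assumption: a Linux host where the daemon uses the default socket path.
DOCKER_SOCKET = "/var/run/docker.sock"


def build_request(path: str) -> bytes:
    """Build a minimal HTTP GET request for a Docker Engine API path."""
    # HTTP/1.0 without keep-alive asks the server to close the connection
    # after its reply, so the response can simply be read until EOF.
    return f"GET {path} HTTP/1.0\r\nHost: docker\r\n\r\n".encode("ascii")


def docker_api_get(path: str, socket_path: str = DOCKER_SOCKET):
    """Send a GET request to the daemon over its Unix socket and decode the JSON body."""
    with socket.socket(socket.AF_UNIX, socket.SOCK_STREAM) as sock:
        sock.connect(socket_path)
        sock.sendall(build_request(path))
        chunks = []
        while True:
            chunk = sock.recv(65536)
            if not chunk:  # empty read: the daemon closed the connection
                break
            chunks.append(chunk)
    head, _, body = b"".join(chunks).partition(b"\r\n\r\n")
    status = head.split(b"\r\n", 1)[0].split()
    if len(status) < 2 or status[1] != b"200":
        raise RuntimeError(f"Docker API error: {head.decode(errors='replace')}")
    return json.loads(body)
```

Calling docker_api_get("/containers/json") returns a list of dictionaries with keys such as Id, Names, Image, and State, which is the same data the docker ps command formats as a table.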

C. The architecture of Docker

Docker's architecture is made up of the following components:

Docker client: The user interface, such as the docker command-line tool, for interacting with Docker.

Docker daemon: The server component that builds images and runs and manages Docker containers.

Docker images: Read-only templates, made up of stacked layers, that include the application code, dependencies, and system tools needed to run the application.

Docker containers: Instances of Docker images that are created, run, and managed by the Docker daemon.

Docker registries: Centralized services, such as Docker Hub, for storing and sharing Docker images.

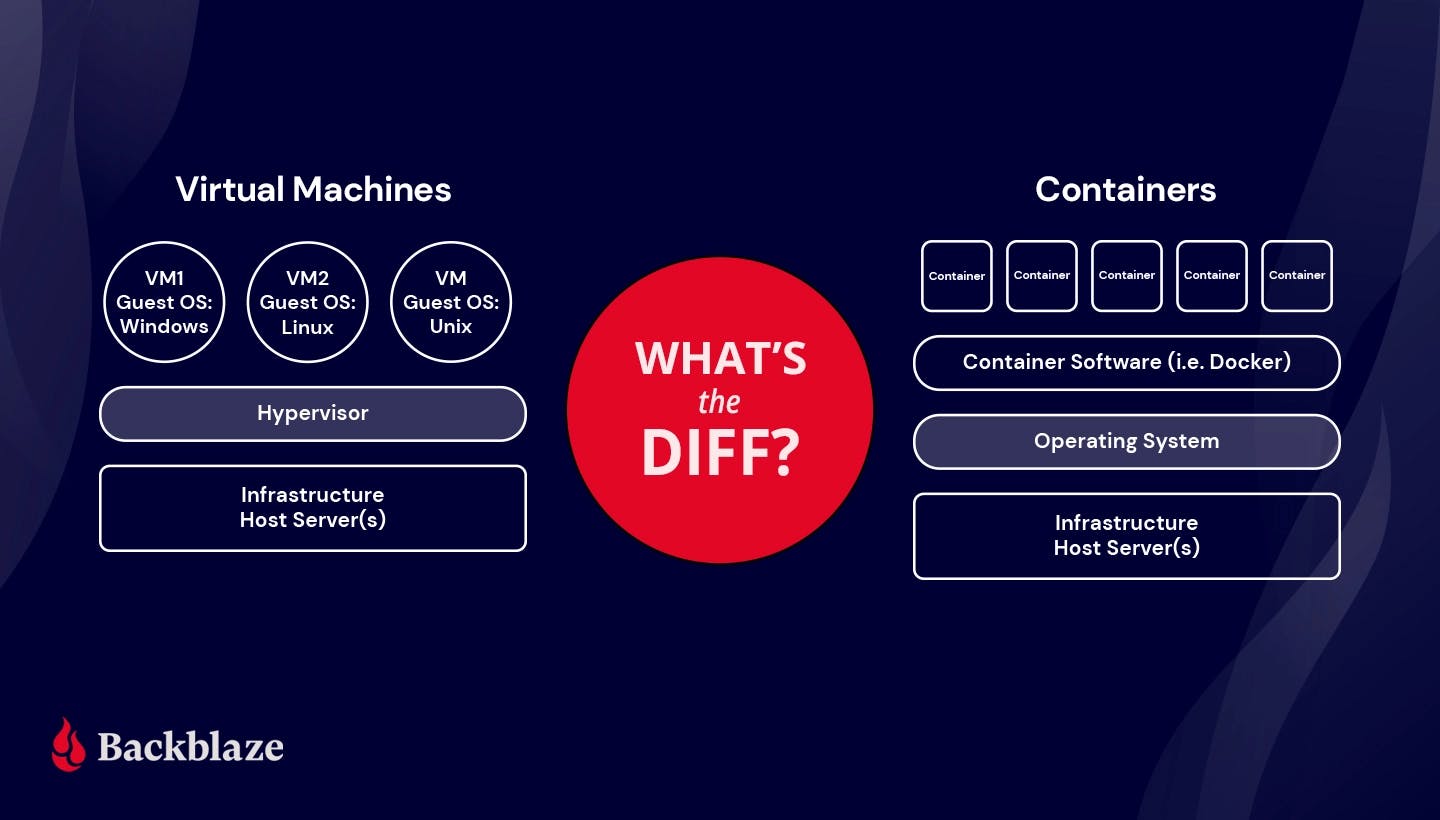

D. The difference between Docker and virtual machines

While both Docker and virtual machines provide isolation for running applications, they take different approaches to virtualization. Virtual machines rely on a hypervisor to share the hardware, and each virtual machine runs its own full operating system, which makes virtual machines resource-intensive and slow to start. Docker containers, in contrast, share the host operating system's kernel, which makes them lightweight and quick to start. As a result, Docker uses resources more efficiently than virtual machines, especially when many containers run on the same machine.

Getting Started With Docker

A. Installing Docker

To get started with Docker, you first need to install it on your system. Docker provides Docker Desktop for Windows, macOS, and Linux, as well as Docker Engine packages for Linux servers. Download the package for your platform from the Docker website and follow its installation instructions.

B. Creating and running a Docker container

To create a Docker container, you need a Docker image. A Docker image is a read-only template that includes the application code, dependencies, and system tools needed to run the application. You can use an existing image from a registry such as Docker Hub or build your own.

Once you have an image, you can use the docker run command to create and start a container from it. For example, the following command creates a new container from the official nginx image, which Docker downloads from Docker Hub if it is not already available locally:

```bash
docker run -d -p 80:80 nginx
```

This command runs the nginx container in detached mode, meaning that it runs in the background, and maps port 80 on the host to port 80 in the container.
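The -p value always lists the host side first and the container side second, so it can help to see the common forms spelled out. The sketch below parses a -p value into its parts. parse_publish and PortMapping are hypothetical names for illustration, and the parser ignores IPv6 addresses and port ranges, which Docker also accepts.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class PortMapping:
    host_ip: str
    host_port: Optional[int]  # None: Docker picks a free port on the host
    container_port: int
    protocol: str


def parse_publish(spec: str) -> PortMapping:
    """Parse '[host_ip:][host_port:]container_port[/protocol]' (hypothetical helper)."""
    address, _, protocol = spec.partition("/")
    parts = address.split(":")
    if len(parts) == 1:    # "80": container port only
        host_ip, host_port, container_port = "0.0.0.0", "", parts[0]
    elif len(parts) == 2:  # "8080:80": host port first, then container port
        host_ip = "0.0.0.0"
        host_port, container_port = parts
    elif len(parts) == 3:  # "127.0.0.1:8080:80": bind to one host address
        host_ip, host_port, container_port = parts
    else:
        raise ValueError(f"unsupported port spec: {spec!r}")
    return PortMapping(
        host_ip=host_ip,
        host_port=int(host_port) if host_port else None,
        container_port=int(container_port),
        protocol=protocol or "tcp",
    )


print(parse_publish("80:80"))
# → PortMapping(host_ip='0.0.0.0', host_port=80, container_port=80, protocol='tcp')
print(parse_publish("127.0.0.1:8080:80/udp"))
# → PortMapping(host_ip='127.0.0.1', host_port=8080, container_port=80, protocol='udp')
```

With the nginx example, 80:80 sends requests for port 80 on the host to port 80 inside the container; 8080:80 would instead make nginx reachable at http://localhost:8080.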

C. Basic Docker commands

Docker provides a set of basic commands that you can use to manage Docker containers and images. Some of the most commonly used Docker commands include:

docker ps: Lists the running Docker containers.

docker images: Lists the Docker images available on the system.

docker start/stop/restart: Starts, stops, or restarts a Docker container.

docker rm: Removes a Docker container.

docker rmi: Removes a Docker image.

D. Building and pushing Docker images

To build a Docker image, you need to create a Dockerfile. A Dockerfile is a script that contains instructions for building the Docker image. Once you have created the Dockerfile, you can use the Docker build command to build the image.

For example, the following Dockerfile builds a simple Node.js application:

```dockerfile
FROM node:10
WORKDIR /app
COPY . .
RUN npm install
CMD ["npm", "start"]
```

To build the image, you can run the following command:

```bash
docker build -t my-node-app .
```

Once you have built the Docker image, you can push it to a registry to share it with others. Docker Hub is Docker's public registry, and you can also use private repositories or run your own registry. Because the image above was built with the name my-node-app only, you first need to log in and tag it with your Docker Hub username; docker push uses that name to decide where the image goes:

```bash
docker login
docker tag my-node-app my-username/my-node-app
docker push my-username/my-node-app
```

These commands push the image to Docker Hub under the my-username account, with the default tag latest.
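docker push works out where to send an image from the image name itself. The sketch below models a simplified version of the name normalization Docker applies: a missing registry means Docker Hub (docker.io), a single-component Docker Hub name belongs to the official library/ namespace, and a missing tag means latest. parse_image_ref is a hypothetical helper for illustration and does not handle digests (@sha256:...).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ImageRef:
    registry: str
    repository: str
    tag: str


def parse_image_ref(ref: str) -> ImageRef:
    """Split an image name into registry, repository, and tag (digests not handled)."""
    name, tag = ref, "latest"  # Docker's default tag
    # A colon after the last slash starts a tag; an earlier colon is a registry port.
    colon = ref.rfind(":")
    if colon > ref.rfind("/"):
        name, tag = ref[:colon], ref[colon + 1:]
    first, _, rest = name.partition("/")
    # The first component is a registry only if it looks like a host name.
    if rest and ("." in first or ":" in first or first == "localhost"):
        registry, repository = first, rest
    else:
        registry, repository = "docker.io", name
    # Single-name Docker Hub images (such as nginx) live under library/.
    if registry == "docker.io" and "/" not in repository:
        repository = "library/" + repository
    return ImageRef(registry, repository, tag)


print(parse_image_ref("my-node-app"))
# → ImageRef(registry='docker.io', repository='library/my-node-app', tag='latest')
print(parse_image_ref("my-username/my-node-app"))
# → ImageRef(registry='docker.io', repository='my-username/my-node-app', tag='latest')
```

This is why an image named only my-node-app has to carry your username before it can go to your own Docker Hub account: without the prefix, the name points at the official library namespace.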

Dockerizing your application

A. Preparing your application for Docker

Before you can Dockerize your application, you need to prepare it for containerization. This involves ensuring that your application can run in a containerized environment and that all the necessary dependencies are included.

Some of the things you may need to do to prepare your application for Docker include:

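Ensuring that your application listens on the correct network interface (usually 0.0.0.0, meaning all interfaces, rather than localhost, which is reachable only from inside the container).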

Updating your application's configuration to use environment variables.

Packaging any required dependencies or libraries with your application.
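The first two points can be sketched with a small service. The example below uses Python's standard-library HTTP server as a stand-in for the Node.js app used elsewhere in this guide, and the HOST and PORT variable names are assumptions. It binds to 0.0.0.0 by default because an application that listens only on 127.0.0.1 inside a container cannot be reached through a published port.

```python
import os
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Mapping, Tuple


def load_config(env: Mapping[str, str] = os.environ) -> Tuple[str, int]:
    """Read bind address and port from environment variables (names are assumptions)."""
    # 0.0.0.0 accepts connections on every interface, including the one
    # Docker forwards published ports to; 127.0.0.1 would be unreachable.
    host = env.get("HOST", "0.0.0.0")
    port = int(env.get("PORT", "3000"))
    return host, port


class HelloHandler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        body = b"Hello from a container\n"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def make_server(env: Mapping[str, str] = os.environ) -> HTTPServer:
    """Create (but do not start) a server configured from the environment."""
    return HTTPServer(load_config(env), HelloHandler)
```

Calling make_server().serve_forever() starts the service; the port can then be changed at run time without rebuilding the image, for example with docker run -e PORT=8080 -p 8080:8080 followed by the image name.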

B. Creating a Dockerfile

Once your application is ready for Docker, you can create a Dockerfile. A Dockerfile is a script that contains instructions for building a Docker image. The Dockerfile specifies the base image, the application code, and any additional dependencies or configuration.

For example, the following Dockerfile creates an image for a simple Node.js application:

```dockerfile
FROM node:10
WORKDIR /app
COPY package*.json ./
RUN npm install
COPY . .
EXPOSE 3000
CMD [ "npm", "start" ]
```

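This Dockerfile specifies that the image should be based on the Node.js 10 image and sets the working directory to /app. It then copies the package.json and package-lock.json files and installs the dependencies before copying the rest of the application code, so Docker can reuse the cached dependency layer when only the code changes. Finally, it documents that the application listens on port 3000 (EXPOSE does not publish the port by itself; the -p option of docker run does that) and sets the default command to run the application using npm.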
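The order of these instructions matters because of Docker's build cache: each instruction produces a layer, and once one step's inputs change, that step and every step after it are rebuilt. COPY steps depend on the contents of the copied files, while a RUN step is reused as long as everything before it was reused. The following is a simplified model of that behaviour, not Docker's actual cache implementation, and the file names and contents are made up. It compares this Dockerfile with the earlier one that runs COPY . . before npm install.

```python
import hashlib
from typing import Dict, List, Tuple

# A step is (instruction, files it copies into the image).
Step = Tuple[str, List[str]]


def cache_keys(steps: List[Step], files: Dict[str, str]) -> List[str]:
    """Chain each step's key to its parent, like layers stacked in order."""
    keys, parent = [], ""
    for instruction, copied in steps:
        h = hashlib.sha256()
        h.update(parent.encode())
        h.update(instruction.encode())
        for path in sorted(copied):
            # COPY steps depend on the contents of the files they copy.
            h.update(path.encode())
            h.update(files.get(path, "").encode())
        parent = h.hexdigest()
        keys.append(parent)
    return keys


def rebuilt_steps(steps: List[Step], old_files: Dict[str, str], new_files: Dict[str, str]) -> List[str]:
    """Return the instructions whose cache key changed between two builds."""
    old, new = cache_keys(steps, old_files), cache_keys(steps, new_files)
    return [instr for (instr, _), a, b in zip(steps, old, new) if a != b]


ALL = ["package.json", "package-lock.json", "server.js"]
NAIVE = [("FROM node:10", []), ("WORKDIR /app", []), ("COPY . .", ALL),
         ("RUN npm install", []), ('CMD ["npm", "start"]', [])]
ORDERED = [("FROM node:10", []), ("WORKDIR /app", []),
           ("COPY package*.json ./", ["package.json", "package-lock.json"]),
           ("RUN npm install", []), ("COPY . .", ALL),
           ("EXPOSE 3000", []), ('CMD [ "npm", "start" ]', [])]

FILES_V1 = {"package.json": '{"name": "demo"}', "package-lock.json": "{}",
            "server.js": "console.log(1)"}
FILES_V2 = {**FILES_V1, "server.js": "console.log(2)"}  # only app code changed

print(rebuilt_steps(NAIVE, FILES_V1, FILES_V2))
# → ['COPY . .', 'RUN npm install', 'CMD ["npm", "start"]']
print(rebuilt_steps(ORDERED, FILES_V1, FILES_V2))
# → ['COPY . .', 'EXPOSE 3000', 'CMD [ "npm", "start" ]']
```

In the first ordering, editing server.js forces npm install to run again; in the second, the dependency layer is reused and only the cheap steps from the final COPY onward are repeated.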

C. Building a Docker image

To build the Docker image, you can use the Docker build command. The build command reads the Dockerfile and builds an image from the instructions.

For example, to build the image based on the Dockerfile in the current directory and tag it with a name and version, you can use the following command:

```bash
docker build -t my-app:v1 .
```

This command builds the Docker image using the Dockerfile in the current directory, and tags it with the name my-app and version v1.

D. Running your Dockerized application

To run your Dockerized application, you can use the Docker run command. The run command creates a new container from the image and runs it.

For example, to run the my-app:v1 image, you can use the following command:

```bash
docker run -p 3000:3000 my-app:v1
```

This command runs the container in the foreground and maps port 3000 on the host to port 3000 in the container.

Once your application is running in a Docker container, you can manage it with commands such as docker stop and docker restart, view its output with docker logs, and check its CPU and memory usage with docker stats.
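For performance metrics, docker stats --no-stream prints a single snapshot of resource usage for running containers, and --format "{{json .}}" makes each output line a JSON object. The sketch below turns that output into numbers you can compare. It assumes the Docker CLI is on the PATH, and the summary keys it returns (name, cpu_percent, mem_percent) are illustrative choices.

```python
import json
import subprocess
from typing import Dict, List, Union

Row = Dict[str, Union[str, float]]


def parse_stats(output: str) -> List[Row]:
    """Turn JSON-lines output from docker stats into numeric summaries."""
    rows: List[Row] = []
    for line in output.splitlines():
        if not line.strip():
            continue
        raw = json.loads(line)
        rows.append({
            "name": raw["Name"],
            # Percentages arrive as strings such as "12.50%".
            "cpu_percent": float(raw["CPUPerc"].rstrip("%")),
            "mem_percent": float(raw["MemPerc"].rstrip("%")),
        })
    return rows


def container_stats() -> List[Row]:
    """Take one snapshot of resource usage for all running containers."""
    result = subprocess.run(
        ["docker", "stats", "--no-stream", "--format", "{{json .}}"],
        capture_output=True, text=True, check=True,
    )
    return parse_stats(result.stdout)
```

For example, [row["name"] for row in container_stats() if row["cpu_percent"] > 80] lists the containers currently using more than 80 percent CPU.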

Happy containerization

Best practices for Docker

A. Containerization best practices

When containerizing your application with Docker, there are several best practices you should follow to ensure your containers are optimized and efficient. Some of these practices include:

Keeping images small: Smaller images contain fewer packages that could carry vulnerabilities, which reduces their attack surface, and they are faster to pull and easier to manage.

Running a single process per container: Giving each container one responsibility keeps it simple and lets you scale, update, and debug each part of the application independently.

Using environment variables for configuration: By using environment variables for configuration, you make it easier to manage configuration across multiple containers and environments.

Storing data outside of containers: A container's writable layer is deleted when the container is removed, so store persistent data in volumes or bind mounts, which also makes it easier to manage and back up.

B. Security considerations for Docker

When using Docker, it's important to take security into consideration. Some best practices for securing your Docker containers include:

Using trusted base images: Build on official or verified images from trusted publishers.

Limiting container privileges: Containers should be run with the minimum privileges required to run the application.

Regularly updating images: Rebuild your images regularly so they pick up the latest security patches for the base image and dependencies, then redeploy your containers from the new images.

Scanning for vulnerabilities: Use an image scanner such as Docker Scout or Trivy to identify known vulnerabilities in your images.
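Two of these checks can be approximated directly on a Dockerfile. The toy checker below flags a base image without a pinned tag (no tag, or latest) and a Dockerfile that never switches to a non-root user with USER. lint_dockerfile is a hypothetical helper for illustration; it ignores multi-stage subtleties and is no replacement for a real linter or vulnerability scanner.

```python
from typing import List, Optional


def lint_dockerfile(text: str) -> List[str]:
    """Return warnings for unpinned base images and Dockerfiles that run as root."""
    warnings: List[str] = []
    user: Optional[str] = None  # last USER instruction seen, if any
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#"):
            continue
        instruction, _, args = line.partition(" ")
        instruction = instruction.upper()
        if instruction == "FROM":
            # Skip flags such as --platform=linux/amd64 to find the image name.
            image = next(tok for tok in args.split() if not tok.startswith("--"))
            name = image.rsplit("/", 1)[-1]  # drop any registry/namespace prefix
            pinned = "@" in image or (":" in name and not name.endswith(":latest"))
            if image != "scratch" and not pinned:
                warnings.append(f"unpinned base image: {image}")
        elif instruction == "USER":
            user = args.strip()
    if user is None or user.split(":")[0] in ("root", "0"):
        warnings.append("runs as root: add a USER instruction for a non-root user")
    return warnings
```

Run against the Node.js Dockerfile in this guide, it reports only the root warning. The official node images include a non-root user named node, so adding USER node before the CMD instruction is one way to address it.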

C. Managing Docker images and containers

As you start to use Docker in production, you'll need to manage your Docker images and containers. Some best practices for managing Docker images and containers include:

Keeping a consistent naming convention: Use a consistent naming convention for images and containers to make them easier to manage.

Tagging images: Tagging images makes it easier to identify different versions of an image.

Using container orchestration tools: Use container orchestration tools such as Kubernetes to manage your containers at scale.
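One common tagging convention, similar to the floating tags many official images publish, is to tag each release with its full semantic version plus shorter major.minor and major aliases. The helper below generates those names; release_tags and its MAJOR.MINOR.PATCH input format are assumptions for illustration.

```python
from typing import List


def release_tags(image: str, version: str, include_latest: bool = True) -> List[str]:
    """Return full and floating tags for a MAJOR.MINOR.PATCH release."""
    major, minor, patch = version.split(".")  # raises ValueError for other formats
    tags = [
        f"{image}:{major}.{minor}.{patch}",  # by convention, only ever this exact build
        f"{image}:{major}.{minor}",          # moved to each new patch release
        f"{image}:{major}",                  # moved to each new minor release
    ]
    if include_latest:
        tags.append(f"{image}:latest")
    return tags


print(release_tags("my-app", "1.4.2"))
# → ['my-app:1.4.2', 'my-app:1.4', 'my-app:1', 'my-app:latest']
```

Each name can then be applied with docker tag (for example, docker tag my-app:1.4.2 my-app:1.4) and pushed, so users can either pin an exact version or follow a release line.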

D. Scaling Docker applications

One of the benefits of Docker is the ability to easily scale your application horizontally. Some best practices for scaling Docker applications include:

Using load balancing: Use load balancing to distribute traffic across multiple containers.

Implementing automatic scaling: Use tools such as Kubernetes Horizontal Pod Autoscaler to automatically scale your application based on demand.

Monitoring resource usage: Monitor resource usage to make sure you have enough capacity to handle traffic. Use tools such as the docker stats command or Prometheus to track it.
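The Kubernetes Horizontal Pod Autoscaler mentioned above computes its target from a documented ratio, desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), and skips scaling when that ratio is within a tolerance of 1.0 (0.1 by default). The function below is a simplified sketch of that rule with assumed minimum and maximum replica bounds; the real controller adds stabilization windows and other safeguards.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified Horizontal Pod Autoscaler scaling rule."""
    ratio = current_metric / target_metric
    # Close enough to the target: keep the current replica count.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds (the defaults here are assumptions).
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(3, current_metric=90, target_metric=60))  # → 5
```

Three replicas averaging 90% CPU against a 60% target give a ratio of 1.5, so the rule asks for ceil(4.5) = 5 replicas.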

By following these best practices, you can ensure that your Docker containers are secure, efficient, and easy to manage.